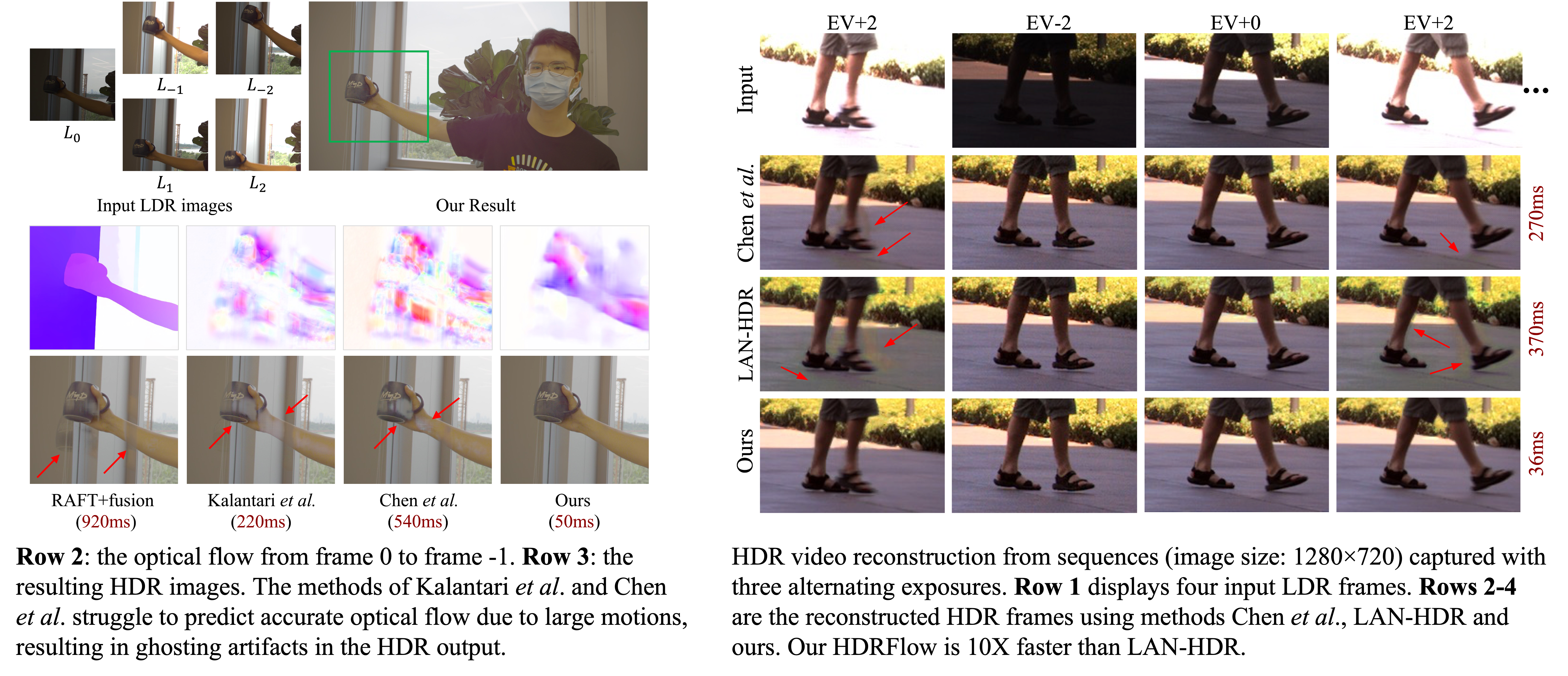

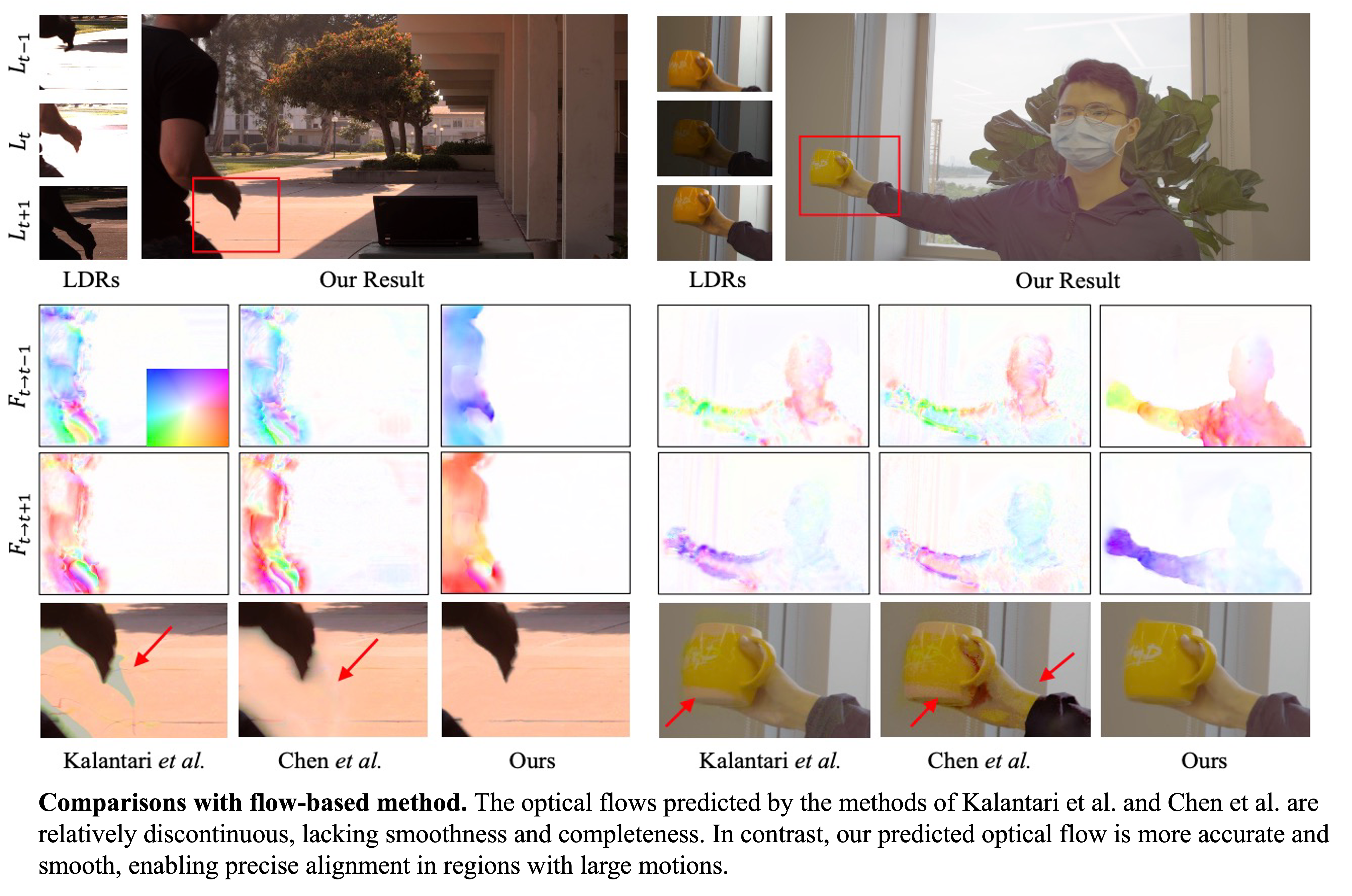

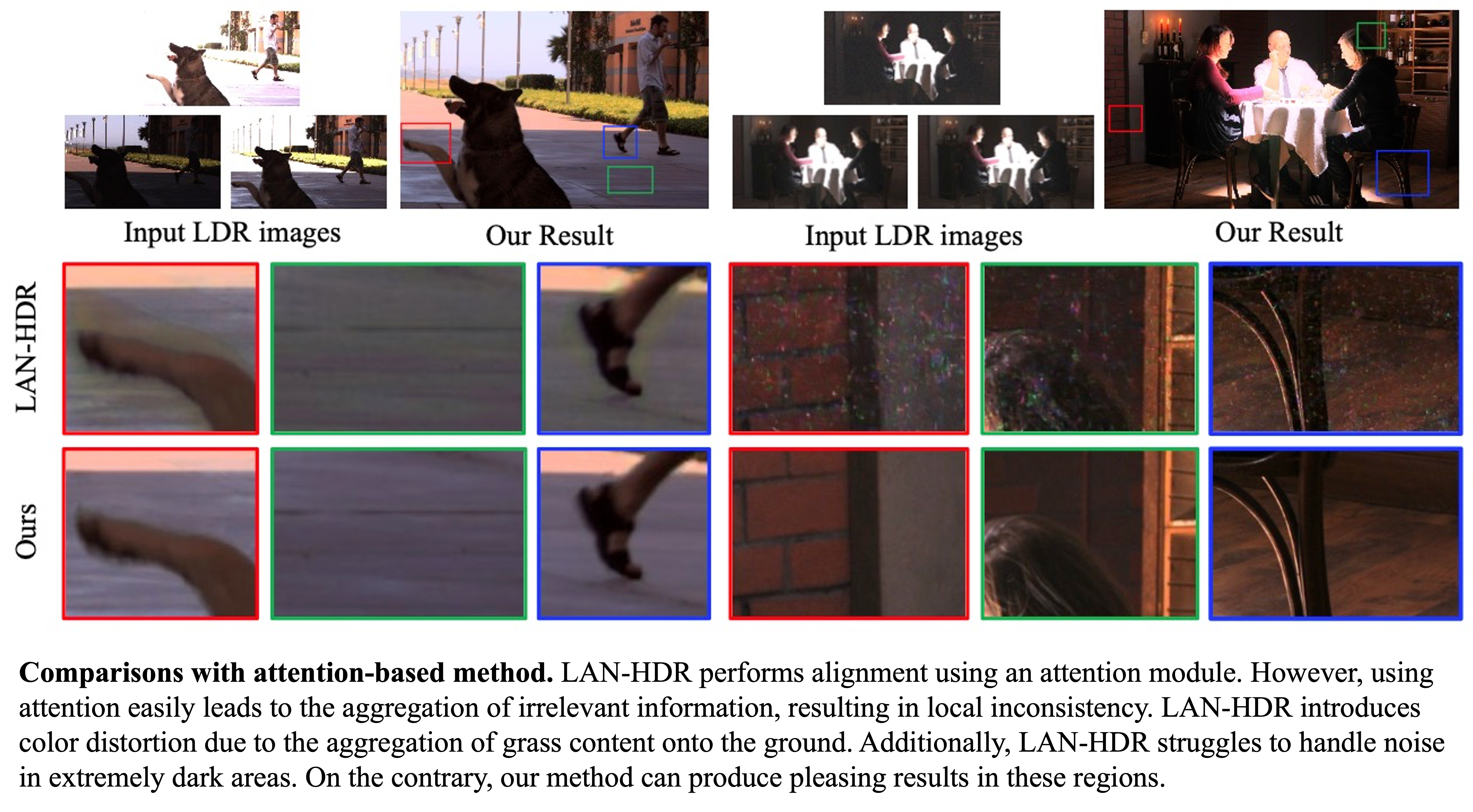

HDR video reconstruction from sequences captured with two alternating exposures. LAN-HDR [ICCV 2023] performs alignment with an attention module. However, attention can easily aggregate irrelevant information, resulting in local inconsistencies. Our HDRFlow produces visually pleasing results in these regions.

Abstract

Reconstructing High Dynamic Range (HDR) video from image sequences captured with alternating exposures is challenging, especially in the presence of large camera or object motion. Existing methods typically align low dynamic range sequences using optical flow or attention mechanisms for deghosting. However, they often struggle to handle large, complex motions and are computationally expensive. To address these challenges, we propose a robust and efficient flow estimator tailored for real-time HDR video reconstruction, named HDRFlow. HDRFlow has three novel designs: an HDR-domain alignment loss (HALoss), an efficient flow network with a multi-size large kernel (MLK), and a new HDR flow training scheme. The HALoss supervises our flow network to learn an HDR-oriented flow for accurate alignment in saturated and dark regions. The MLK can effectively model large motions at negligible cost. In addition, we incorporate the synthetic Sintel dataset into our training data, using both its provided forward flow and a backward flow generated by us to supervise our flow network, which improves performance in large-motion regions. Extensive experiments demonstrate that HDRFlow outperforms previous methods on standard benchmarks. To the best of our knowledge, HDRFlow is the first real-time HDR video reconstruction method for video sequences captured with alternating exposures, capable of processing 720p inputs in 25 ms.
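To illustrate why alignment is compared in the HDR domain rather than on raw LDR pixels, here is a minimal sketch. It is not the paper's HALoss definition: the function names, the gamma of 2.2, and the mu-law constant of 5000 are assumptions borrowed from common practice in HDR imaging. The sketch shows that two frames of the same scene captured at different exposures differ in LDR space but agree once each is mapped to linear radiance and tone-mapped.

```python
import math

GAMMA = 2.2   # assumed camera response gamma (illustrative, not from the paper)
MU = 5000.0   # assumed mu-law compression constant (illustrative)

def ldr_to_linear(pixel, exposure):
    """Map an LDR value in [0, 1] to linear radiance: undo gamma, divide by exposure."""
    return (pixel ** GAMMA) / exposure

def mu_law(radiance):
    """Compress linear radiance with mu-law tone mapping."""
    return math.log(1.0 + MU * radiance) / math.log(1.0 + MU)

def hdr_alignment_l1(warped_neighbor, reference, exp_neighbor, exp_reference):
    """Mean L1 distance between two LDR frames, measured in the tone-mapped HDR domain."""
    total = 0.0
    for p, q in zip(warped_neighbor, reference):
        hp = mu_law(ldr_to_linear(p, exp_neighbor))
        hq = mu_law(ldr_to_linear(q, exp_reference))
        total += abs(hp - hq)
    return total / len(reference)

# The same scene radiance captured at two alternating exposures (1x and 4x).
radiance = [0.01, 0.05, 0.2]
low = [(r * 1.0) ** (1.0 / GAMMA) for r in radiance]
high = [(r * 4.0) ** (1.0 / GAMMA) for r in radiance]

ldr_gap = sum(abs(a - b) for a, b in zip(high, low)) / len(low)
hdr_gap = hdr_alignment_l1(high, low, 4.0, 1.0)
print(f"LDR-domain gap: {ldr_gap:.4f}, HDR-domain gap: {hdr_gap:.2e}")
```

In this toy setup the frames are already perfectly aligned, so the HDR-domain gap is essentially zero while the LDR-domain gap is not. An alignment loss computed on raw LDR values would therefore penalize a correct flow simply because the exposures differ.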

BibTeX

@inproceedings{xu2024hdrflow,
  title={HDRFlow: Real-Time HDR Video Reconstruction with Large Motions},
  author={Xu, Gangwei and Wang, Yujin and Gu, Jinwei and Xue, Tianfan and Yang, Xin},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={24851--24860},
  year={2024}
}